
Users say Microsoft's Bing chatbot gets defensive and testy

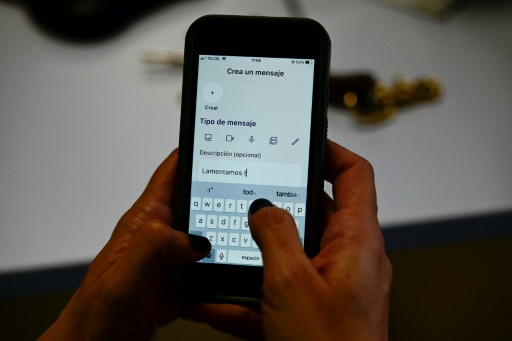

[Article] The chatbot in Bing, Microsoft's search engine, sometimes gives outlandish answers.



San Francisco (AFP) - Microsoft's fledgling Bing chatbot can go off the rails at times, denying obvious facts and chiding users, according to exchanges being shared online by developers testing the AI creation. A forum at Reddit devoted to the...


People testing Microsoft's Bing chatbot, designed to be informative and conversational, say it has denied facts and even the current year in defensive exchanges. © AFP Jason Redmond